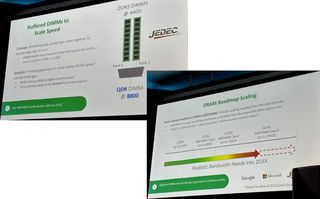

MRDIMMs (multi-ranked buffered DIMMs) could be the standard among buffered DIMMs by 203x. In addition, AMD has voiced its commitment at MemCon 2023 to help push JEDEC's MRDIMM open standard, which will significantly boost bandwidth over standard DDR5 DIMMs.

It has been a constant struggle to feed processors with the necessary memory bandwidth as core counts continue to rise. It's one of the reasons why AMD and Intel have shifted over to DDR5 memory on their mainstream processors, such as Ryzen 7000 and Raptor Lake. So you can imagine the challenge in the data center segment with AMD's EPYC Genoa and Intel's Sapphire Rapids Xeon chips pushing up to 96 cores and 60 cores, respectively.

It gets even more complicated when you slot these multi-core EPYC and Xeon monsters in a 2P or sometimes a 4P configuration. The result is a gargantuan motherboard with an insane number of memory slots. Unfortunately, motherboards can only get so big, and processors keep debuting with more cores. Other approaches already exist, such as the Compute Express Link (CXL) interconnect or High Bandwidth Memory (HBM). MRDIMM aims to be another option for vendors to mitigate the difficulties associated with DRAM speed scaling.

MRDIMM's objective is to double the bandwidth available from existing DDR5 memory. The concept is simple: combine two ranks of DDR5 DRAM to deliver twice the data rate to the host. The design permits simultaneous access to both ranks. For example, two DDR5 ranks running at 4,400 MT/s each yield 8,800 MT/s at the host. According to the presentation, a special data buffer or mux combines the transfers from each rank, effectively converting the two DDRs (double data rate) into a single QDR (quad data rate).
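To make the mux idea concrete, here is a minimal Python sketch. It is only an illustration: the presentation does not describe the buffer's actual interleaving granularity or timing, so the one-for-one alternation and the names `mux_ranks` and `host_data_rate` are assumptions made for this example.

```python
from itertools import chain


def mux_ranks(rank_a, rank_b):
    """Interleave transfers from two ranks into one host-side stream.

    Assumption: the buffer alternates one transfer from each rank.
    The real interleaving granularity is not given in the source.
    """
    if len(rank_a) != len(rank_b):
        raise ValueError("both ranks must supply the same number of transfers")
    # zip pairs up the transfers; chain flattens the pairs into one stream.
    return list(chain.from_iterable(zip(rank_a, rank_b)))


def host_data_rate(rank_rate_mts, ranks=2):
    """Host-side data rate when `ranks` ranks are accessed simultaneously."""
    return rank_rate_mts * ranks


# Each rank delivers four transfers in the same time window...
rank_a = ["A0", "A1", "A2", "A3"]
rank_b = ["B0", "B1", "B2", "B3"]

# ...so the host sees eight transfers in that window.
print(mux_ranks(rank_a, rank_b))
# ['A0', 'B0', 'A1', 'B1', 'A2', 'B2', 'A3', 'B3']

print(host_data_rate(4400))
# 8800
```

The host receives twice as many transfers in the same window, which is where the 4,400 MT/s to 8,800 MT/s doubling comes from.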

MRDIMM Specifications

| MRDIMM | Data Rate |
|---|---|
| Gen1 | 8,800 MT/s |
| Gen2 | 12,800 MT/s |
| Gen3 | 17,600 MT/s |

First-generation MRDIMMs will offer data transfer rates of up to 8,800 MT/s. After that, JEDEC expects MRDIMMs to improve gradually, hitting 12,800 MT/s and, subsequently, 17,600 MT/s. However, we won't likely see third-generation MRDIMMs until after 2030, so it's a long project.
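For a rough sense of what those data rates mean, the sketch below converts each generation into a per-rank rate and a theoretical peak bandwidth per module. It assumes a 64-bit (8-byte) data path per DIMM, excluding ECC bits, and two multiplexed ranks. Neither figure comes from the roadmap itself, and real-world throughput would be lower than these peaks.

```python
# Assumption: 64 data bits (8 bytes) move per transfer, ECC excluded.
BYTES_PER_TRANSFER = 8

# Host-side data rates in MT/s, taken from the table above.
MRDIMM_GENERATIONS = {"Gen1": 8800, "Gen2": 12800, "Gen3": 17600}


def per_rank_rate(host_rate_mts, ranks=2):
    """Data rate each rank must sustain if `ranks` ranks are multiplexed."""
    return host_rate_mts // ranks


def peak_bandwidth_gbs(host_rate_mts):
    """Theoretical peak bandwidth of one module in GB/s."""
    return host_rate_mts * BYTES_PER_TRANSFER / 1000


for gen, rate in MRDIMM_GENERATIONS.items():
    print(
        f"{gen}: {rate} MT/s host, "
        f"{per_rank_rate(rate)} MT/s per rank, "
        f"{peak_bandwidth_gbs(rate):.1f} GB/s peak"
    )
# Gen1: 8800 MT/s host, 4400 MT/s per rank, 70.4 GB/s peak
# Gen2: 12800 MT/s host, 6400 MT/s per rank, 102.4 GB/s peak
# Gen3: 17600 MT/s host, 8800 MT/s per rank, 140.8 GB/s peak
```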

In conjunction with SK hynix and Renesas, Intel developed Multiplexer Combined Ranks (MCR) DIMMs based on a similar concept to MRDIMM. According to retired engineer chiakokhua, AMD was preparing a comparable proposition called HBDIMM. Some differences exist; however, no public materials are available to compare MCR DIMM and HBDIMM.

SK hynix expects the first MCR DIMMs to offer transfer rates over 8,000 MT/s, so they are comparable in performance to the first generation of MRDIMM offerings. Intel recently demoed a Granite Rapids Xeon chip with the new MCR DIMMs. The dual-socket system delivered memory bandwidth equivalent to 1.5 TB/s, using 12 MCR DIMMs clocked at DDR5-8800.
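A quick back-of-the-envelope check helps put the 1.5 TB/s figure in context. The sketch below again assumes 8 bytes per transfer per DIMM, which is not stated in the demo. The demo details also don't say whether the 12 DIMMs are per socket or for the whole system, so both cases are computed.

```python
# Assumption: 64 data bits (8 bytes) per transfer per DIMM, ECC excluded.
BYTES_PER_TRANSFER = 8


def aggregate_peak_tbs(dimm_count, rate_mts):
    """Theoretical peak bandwidth in TB/s across `dimm_count` DIMMs."""
    return dimm_count * rate_mts * BYTES_PER_TRANSFER / 1_000_000


# Case 1: 12 DIMMs in the whole dual-socket system.
total_12 = aggregate_peak_tbs(12, 8800)

# Case 2 (assumption): 12 DIMMs per socket, so 24 in total.
total_24 = aggregate_peak_tbs(24, 8800)

print(f"12 DIMMs: {total_12:.2f} TB/s peak")
print(f"24 DIMMs: {total_24:.2f} TB/s peak")
```

Under these assumptions, 12 DIMMs peak at about 0.84 TB/s, while 24 DIMMs peak at about 1.69 TB/s. The quoted 1.5 TB/s would therefore only fit the second case, at roughly 89% of theoretical peak.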

The roadmap for MRDIMMs is vague since it doesn't show when we can expect first-generation MRDIMMs. However, Granite Rapids and competing AMD EPYC Turin (Zen 5) processors will arrive in 2024. It's reasonable to expect MCR DIMMs to be available by then since Granite Rapids can use them. Although there hasn't been any official confirmation, it's plausible that Turin could leverage MRDIMMs, given AMD's recent pledge. If so, MRDIMMs could potentially arrive in 2024 as well.